‘Neural network’ is a term that has become very popular in the computing business. Big technology companies are investing large sums of money in researching, building, and harnessing neural networks. But what exactly are they, and what are they used for?

An Introduction to Neural Networks

A quick Google search will instantly tell you that the term ‘neural network’ refers not only to computing but also to biology. In fact, the neural networks used in computing are attempts at mimicking those found in biology. To distinguish the two, the computing versions are called artificial neural networks (ANNs).

In biological neural networks, chains of interconnected neurons transmit signals between the brain and the rest of the body. Each neuron receives signals from other neurons and, if the sum of those signals exceeds a specific threshold, transmits a signal of its own to the neurons it is connected to. With this basic principle in mind, let’s dive straight into how this can be modeled in computers.
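To make the threshold idea concrete, here is a minimal Python sketch (not the APL code discussed later) of a neuron that sums its incoming signals and fires only when the total exceeds a threshold. The function name and the threshold value of 1.0 are illustrative assumptions.

```python
def threshold_neuron(signals, threshold):
    """Return 1 (fire) if the summed incoming signals exceed the threshold, else 0."""
    return 1 if sum(signals) > threshold else 0


# Illustrative threshold of 1.0 (an assumption, not a biological constant).
print(threshold_neuron([0.5, 0.25, 0.5], threshold=1.0))  # 1.25 > 1.0, so prints 1
print(threshold_neuron([0.25, 0.5], threshold=1.0))       # 0.75 <= 1.0, so prints 0
```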

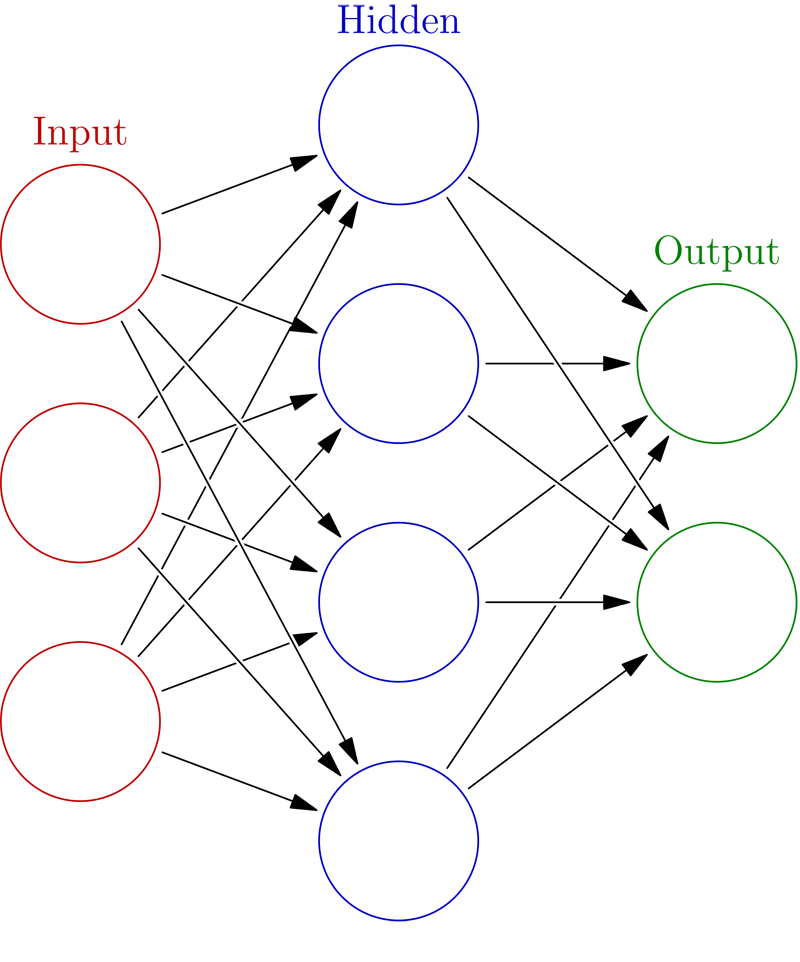

When neurons are created in an ANN, each of their incoming connections is assigned a weight. These weights determine how much an incoming signal affects the output of a given neuron. Neurons are usually grouped into layers that the input signals flow through. A basic feed-forward network, for example, has three layers: an input layer, a hidden layer that combines the signals from the input layer, and an output layer that summarises the output of the hidden layer. Each neuron receives a collection of signals, performs a calculation on them, and passes the resulting figure on. Many networks, including the simple ones discussed here, are fully connected, meaning every neuron in one layer is connected to every neuron in the adjacent layers.
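The following Python sketch shows one way to express a fully connected feed-forward pass: each neuron multiplies its incoming signals by its weights, adds a bias, and passes the total through an activation function. The sigmoid activation, the bias terms, the `weights[j][i]` layout, and the example weight values are all assumptions made for the illustration; the article does not specify them.

```python
import math


def sigmoid(x):
    """Squash any weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute one fully connected layer.

    weights[j][i] is the weight on the connection from input i to neuron j,
    so every neuron in the layer sees every input signal.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feed_forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer_forward(inputs, hidden_w, hidden_b)
    return layer_forward(hidden, output_w, output_b)


# Two inputs, three hidden neurons, one output neuron; the weights are arbitrary examples.
hidden_w = [[0.5, -0.5], [1.0, 1.0], [-1.0, 0.25]]
hidden_b = [0.0, -0.5, 0.1]
output_w = [[1.0, -1.0, 0.5]]
output_b = [0.0]
print(feed_forward([1.0, 0.0], hidden_w, hidden_b, output_w, output_b))
```

Storing each layer's weights as a matrix like this is what makes array languages such as APL a natural fit: a whole layer is one matrix-vector product followed by the activation.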

Please see the diagram below for an example of a connected network.

An ANN on its own isn’t much use. Its value comes once the weights have been tuned to the point where the input signals produce the correct output. To take an example from the biological world, if you hit your thumb (really hard) with a hammer, you should perceive pain in your thumb and not your toe (unless you then dropped the hammer on it).

ANNs can be trained by providing them with example inputs and their expected outcomes. The error (the difference between the actual output and the expected outcome) is measured and fed back into the network, and the weights are adjusted with the aim of reducing it. A network can be undertrained or overtrained (memorising the training examples instead of generalising from them), but a correctly trained network should be able to evaluate input accurately and produce the correct output. A common learning algorithm for feed-forward networks is backpropagation.
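As a minimal illustration of measuring the error and feeding it back, here is a Python sketch of gradient descent on a single sigmoid neuron with a squared-error measure (often called the delta rule). Backpropagation applies the same idea to hidden layers, using the chain rule to pass each neuron's share of the error backwards. The learning rate, the starting weights, and the function names are assumptions for the example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_step(weights, bias, inputs, target, rate=0.5):
    """One gradient-descent update for a single sigmoid neuron.

    Returns the updated weights and bias, plus the squared error measured
    before the update.
    """
    out = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
    error = out - target                # difference between output and expected outcome
    delta = error * out * (1.0 - out)   # derivative of 0.5 * error**2 w.r.t. the weighted sum
    new_weights = [w - rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - rate * delta
    return new_weights, new_bias, 0.5 * error ** 2


weights, bias = [0.0, 0.0], 0.0
losses = []
for _ in range(5):
    weights, bias, loss = train_step(weights, bias, [1.0, 1.0], target=1.0)
    losses.append(loss)
print(losses)  # each entry is smaller than the one before
```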

If you want to learn more about the specifics of ANNs then please visit Neural Networks and Deep Learning.

Down to the Geek Stuff

One of my colleagues recently went to a conference on artificial intelligence and became hooked on ANNs. The following Wednesday, a few members of the APL community met for our weekly talk (hosted at appear.in/theaplroom on Wednesdays at 3pm), and there was a lot of discussion about ANNs, how one might go about implementing one, and which language would be most suitable for the job.

I was intrigued by what he had to say and started to think about building one. Since we had been discussing APL’s suitability for building an ANN, I thought I would give it a try and see how it performed.

I modeled my work on a character recognition ANN I had seen not long before. The concept was simple: take a 4×5 block of pixels, where ‘1’ is a filled pixel and ‘0’ is an empty pixel, and try to determine which number the block represents by looking at the filled pixels.
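Here is a short Python sketch of how such a block might be turned into network inputs. It assumes the block is 4 pixels wide and 5 tall, flattened row by row into 20 input signals, and that the expected output uses one output neuron per digit (a "one-hot" target). The glyph below is a hypothetical drawing of the digit 1, not taken from the original data set.

```python
# Hypothetical glyph for the digit 1, drawn 4 pixels wide and 5 pixels tall.
# '1' = filled pixel, '0' = empty pixel.
ONE = [
    "0010",
    "0110",
    "0010",
    "0010",
    "0111",
]


def encode_glyph(rows):
    """Flatten the block row by row into a list of 0/1 input signals."""
    return [int(ch) for row in rows for ch in row]


def one_hot(digit, classes=10):
    """Expected output: 1 on the neuron for `digit`, 0 on every other output neuron."""
    return [1 if i == digit else 0 for i in range(classes)]


inputs = encode_glyph(ONE)   # 20 input signals, one per pixel
target = one_hot(1)          # 10 output signals, one per digit 0-9
print(inputs)
print(target)
```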

From my basic knowledge of ANNs, I knew I needed three things: neurons, layers, and a network.

The neurons would perform the calculations on the data. The layers would group together the neurons dedicated to doing a certain job. The network would tie all of this together.
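Those three components map naturally onto three small classes. The Python sketch below is one possible arrangement, not a translation of the author's APL code: the sigmoid activation, the random weight initialisation in [-1, 1], and the 12-neuron hidden layer are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class Neuron:
    """Holds one weight per incoming connection plus a bias, and does the calculation."""

    def __init__(self, n_inputs, rng):
        self.weights = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
        self.bias = rng.uniform(-1.0, 1.0)

    def activate(self, inputs):
        return sigmoid(sum(w * x for w, x in zip(self.weights, inputs)) + self.bias)


class Layer:
    """Groups neurons that all receive the same inputs (fully connected)."""

    def __init__(self, n_neurons, n_inputs, rng):
        self.neurons = [Neuron(n_inputs, rng) for _ in range(n_neurons)]

    def forward(self, inputs):
        return [neuron.activate(inputs) for neuron in self.neurons]


class Network:
    """Ties the layers together: the output of each layer feeds the next."""

    def __init__(self, sizes, seed=0):
        rng = random.Random(seed)
        # sizes = [inputs, hidden..., outputs]; the input layer just passes signals through.
        self.layers = [Layer(n_out, n_in, rng) for n_in, n_out in zip(sizes, sizes[1:])]

    def forward(self, inputs):
        signals = inputs
        for layer in self.layers:
            signals = layer.forward(signals)
        return signals


# Hypothetical sizes for the character-recognition task: 20 pixels in, 10 digits out.
net = Network([20, 12, 10])
print(len(net.forward([0] * 20)))  # 10 output signals, one per digit
```

Modelling every neuron as an object keeps the structure easy to follow, but it is slower than treating each layer as a single weight matrix, which is the more natural style in APL.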

I decided not to write it completely from scratch, so I trawled the internet for a few good code samples to rework into my own ANN. After rewriting most of the code in APL, I had my ANN, complete with all three components.

The next step was to test it. I wrote two tests for the network: the character recognition test described earlier, and a logic gate test in which the network should evaluate its inputs and perform an XOR operation on them. After training for a while, the network was fairly accurate overall, evaluating the inputs correctly and producing the desired output.
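For readers who want to try the XOR test without APL, here is a Python sketch of a 2-input, 3-hidden-neuron, 1-output network trained with backpropagation. It is not the author's implementation; the hidden-layer size, learning rate, epoch count, and random seed are assumptions, and per-example (stochastic) updates are used for simplicity.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# XOR truth table: (inputs, expected output)
XOR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]


def train_xor(hidden=3, rate=0.5, epochs=10000, seed=1):
    """Train a 2-input, `hidden`-neuron, 1-output network on XOR with backpropagation."""
    rng = random.Random(seed)
    w_h = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]
    b_h = [rng.uniform(-1, 1) for _ in range(hidden)]
    w_o = [rng.uniform(-1, 1) for _ in range(hidden)]
    b_o = rng.uniform(-1, 1)

    def forward(x):
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w_h, b_h)]
        y = sigmoid(sum(w * hj for w, hj in zip(w_o, h)) + b_o)
        return h, y

    def mean_squared_error():
        return sum((forward(x)[1] - t) ** 2 for x, t in XOR_DATA) / len(XOR_DATA)

    start_error = mean_squared_error()
    for _ in range(epochs):
        for x, t in XOR_DATA:
            h, y = forward(x)
            d_out = (y - t) * y * (1 - y)  # output error scaled by the sigmoid slope
            # Share the error back to each hidden neuron via its (pre-update) output weight.
            d_hid = [d_out * w_o[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            for j in range(hidden):
                w_o[j] -= rate * d_out * h[j]
                w_h[j][0] -= rate * d_hid[j] * x[0]
                w_h[j][1] -= rate * d_hid[j] * x[1]
                b_h[j] -= rate * d_hid[j]
            b_o -= rate * d_out
    return forward, start_error, mean_squared_error()


predict, before, after = train_xor()
print(f"mean squared error: {before:.4f} -> {after:.4f}")
for x, t in XOR_DATA:
    print(x, "expected", t, "got", round(predict(x)[1], 3))
```

If the outputs have not separated cleanly towards 0 and 1, trying another seed, more hidden neurons, or more epochs is the usual remedy, since small XOR networks can occasionally settle in a poor local minimum.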

So What Next?

I am writing this article because I would love to see some slimmer, more efficient ANNs. Mine is slow and doesn’t always evaluate inputs correctly. I have posted my code on GitHub.

So far I have had a couple of responses, and I have merged a much simpler, faster, and more efficient solution into the repository. However, as well as having all those qualities, I would like the final product to be easy for newcomers to pick up and understand.

Take a look and let me know what you think!